SEO tools are crucial for agencies to cater to their clients’ diverse needs and edge out the competition. This write-up reveals the top SEO tools for agencies in 2024.

Why are top-of-the-line SEO tools needed? Because they help agencies deliver better results to their clients and stay competitive. The right tools effectively carry out SEO audits, enhance SEO content optimization, and ensure thorough website optimization.

How to Choose the Right SEO Tool for Your Agency

Quality SEO tools can help improve your search rankings, run comprehensive site analyses and audits, research keyword search volume, and improve website accessibility.

With the right SEO software, you can ensure better website health and optimize content. For instance, over 70% of SEO professionals use SEO tools regularly for site audits. Moreover, it’s projected that the SEO software market will hit $5.5 billion in 2024. So, how do you select the ideal tool for your agency? Let’s explore:

- Identify Your Needs. Each agency has its own unique SEO needs based on its industry and clients. Understanding these needs is vital for choosing the right tool.

- Consider the Cost. The tool should be affordable and offer good value for money. Review your budget and select the tool that offers the most value within your price range.

- User Experience. Ease of use and efficient customer support are important factors. Select a tool with a user-friendly interface and reliable support.

Best SEO Tools in 2024: A Detailed List

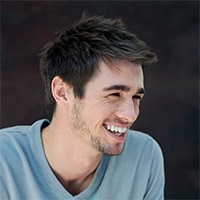

#1. SE Ranking

SE Ranking is a great SEO toolkit for agencies, big and small. Not only does it automate important website optimization tasks, but it also delivers clear-cut results. It’s flexible, user-friendly, and caters to a wide variety of SEO needs. Whether you’re looking for basic website optimization, in-depth competitor analysis, or clear SEO reporting, SE Ranking has it all.

- Rank Tracker: Accurately track keyword rankings across different search engines and locations.

- On-Page SEO Checker: Get optimization tips for your web pages to improve their search engine visibility.

- Website Audit: Find and fix SEO issues on your website.

- Competitive & Keyword Research: Learn from your competition and plan keywords better.

- Backlink Checker: Keep track of the quality and quantity of backlinks to your website.

- SEO Reporting: Generate detailed SEO performance reports for clients with the SEO report generator and send them manually or set custom delivery schedules.

- Integrations: Connect SE Ranking with Google Search Console, Google Analytics, Matomo Analytics, Looker Studio, and other tools. Use the API to transfer data to third-party applications.

SE Ranking offers special features for agencies via its Agency Pack. This add-on includes:

- White Label SEO software

- Unlimited automated WL SEO reports

- 10 additional user seats to share access to the platform with your clients

- Access to Lead Generator with an opportunity to generate up to 100 leads per month

- Spot in SE Ranking’s exclusive Agency Catalog with your agency profile and link to your website.

Price Plan

- Essential Plan: $55/month for core SEO tools.

- Pro Plan: For $109/month, you get access to expanded data limits and easy collaboration.

- Business Plan: For $239/month, enjoy advanced data processing and priority support.

Keep in mind that the Agency Pack is available at $50/month to users with annual Pro or Business subscriptions.

Each plan comes with a 20% discount for annual subscriptions.
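To make the pricing above easier to compare, here is a rough sketch of the yearly cost of each plan when billed annually. It assumes that the listed prices are the month-to-month rates, that the 20% discount applies to those rates, and that the Agency Pack is charged at its full $50/month; none of this is confirmed by the vendor, and the names `MONTHLY_PRICE` and `annual_cost` are made up for illustration.

```python
# Monthly list prices quoted above (USD).
MONTHLY_PRICE = {"Essential": 55.0, "Pro": 109.0, "Business": 239.0}
ANNUAL_DISCOUNT = 0.20       # 20% off for annual subscriptions
AGENCY_PACK_MONTHLY = 50.0   # add-on for annual Pro and Business plans only


def annual_cost(plan: str, with_agency_pack: bool = False) -> float:
    """Estimate the yearly cost of a plan billed annually.

    Assumption: the discount applies to the listed monthly price and
    the Agency Pack itself is not discounted.
    """
    if with_agency_pack and plan not in ("Pro", "Business"):
        raise ValueError("Agency Pack requires an annual Pro or Business plan")
    monthly = MONTHLY_PRICE[plan] * (1 - ANNUAL_DISCOUNT)
    if with_agency_pack:
        monthly += AGENCY_PACK_MONTHLY
    return round(monthly * 12, 2)


if __name__ == "__main__":
    for plan in MONTHLY_PRICE:
        print(plan, annual_cost(plan))
    print("Pro + Agency Pack", annual_cost("Pro", with_agency_pack=True))
```

Under these assumptions, an annual Pro plan with the Agency Pack comes to roughly $1,646 per year. Check the vendor's current pricing page before budgeting.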

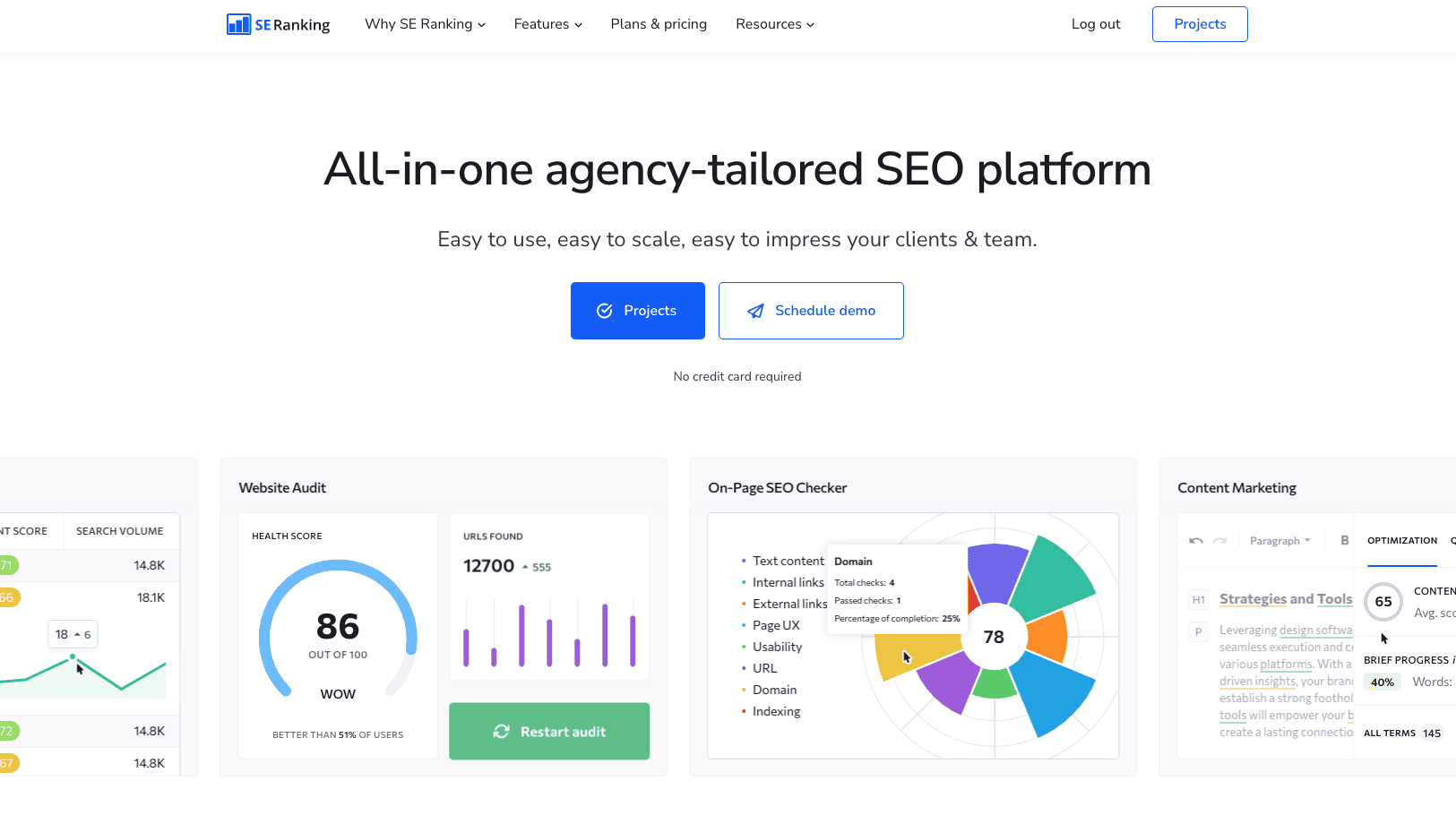

#2. AgencyAnalytics

AgencyAnalytics is perfect for handling multiple SEO projects. With flexible pricing and helpful features, it improves processes and ensures agencies can deliver superior service. It provides current data for shaping SEO plans and lets you share analytics with clients in a professional, well-presented format.

- Custom Dashboards: Tailor your SEO view to display the most important data for each client.

- Regular Reporting: Easily automated reports make keeping clients in the loop a breeze.

- SEO Audit: Detailed reports pinpoint issues that may hinder website positioning.

- Keyword Monitoring: Tracking keyword rankings across various search engines and locales is simple.

- Backlink Checks: Follow and assess your client’s website backlinks.

- Integration: Connects with over 50 marketing tools, consolidating your data in one place.

Price Plan

- Freelancer: $12 per month per campaign, min 5 campaigns.

- Agency: $18 per month per campaign, min 10 campaigns.

- Enterprise: Pricing is custom for agencies requiring 50+ campaigns.
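Because this pricing is per campaign with a minimum, the monthly bill depends on how many campaigns you run. The sketch below estimates it, assuming you are billed for at least the plan minimum even if you run fewer campaigns. That billing rule, and the names `PLANS` and `monthly_cost`, are assumptions for illustration. The Enterprise tier is left out because its pricing is custom.

```python
# (rate per campaign in USD/month, minimum campaigns), as listed above.
PLANS = {"Freelancer": (12, 5), "Agency": (18, 10)}


def monthly_cost(plan: str, campaigns: int) -> int:
    """Estimate the monthly bill for a given number of campaigns.

    Assumption: the plan minimum is billed even when fewer
    campaigns are active.
    """
    if campaigns < 0:
        raise ValueError("campaigns must be non-negative")
    rate, minimum = PLANS[plan]
    billed_campaigns = max(campaigns, minimum)
    return rate * billed_campaigns


if __name__ == "__main__":
    print(monthly_cost("Freelancer", 3))   # billed at the 5-campaign minimum
    print(monthly_cost("Agency", 25))
```

Under this assumption, the entry cost is $60/month on the Freelancer plan and $180/month on the Agency plan.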

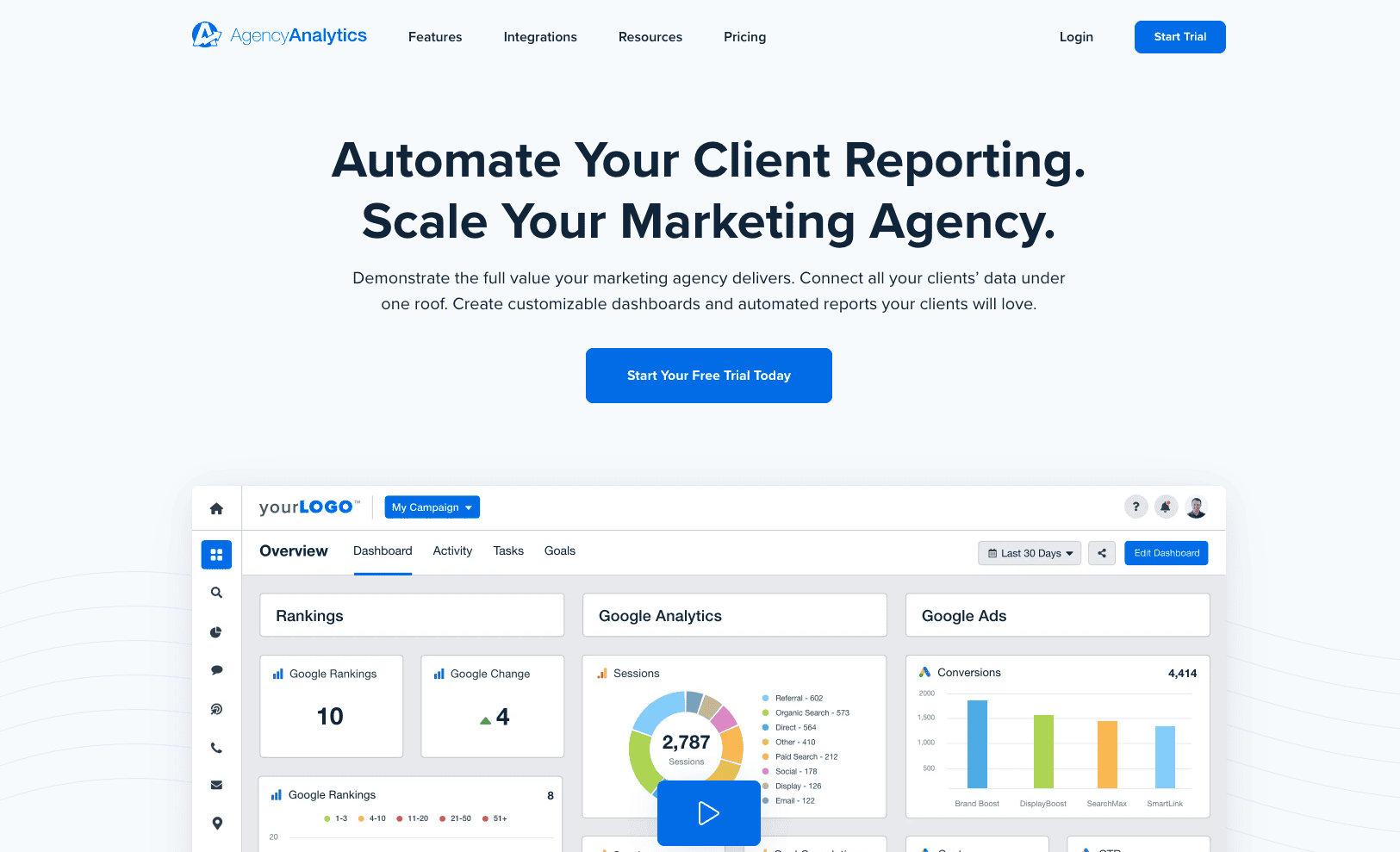

#3. Screaming Frog

Screaming Frog’s SEO Spider is a website crawler designed for thorough SEO audits. Its ability to crawl numerous websites and provide immediate analyses makes it vital for SEO health checks.

- Efficient Crawling: Handles both small and very large websites.

- SEO Auditing: Highlights common SEO problems like broken links, missing titles, and duplicate content.

- Custom Extraction: Pulls out data from the web page’s HTML.

- Integration: Merges easily with Google Analytics, Google Search Console, and PageSpeed Insights for better data analysis.

- Visualization: Maps out your site’s layout to spot areas that need improvement.

Price Plan

- Free Version: Allows up to 500 URLs to be crawled for free, which is perfect for small sites or quick health checks.

- Paid Version: For £199 per year, unlock unlimited crawling, added features, and priority customer support. Prices vary according to the number of licenses, with discounts available for buying more.

#4. Ahrefs

Ahrefs is an all-in-one SEO solution that also meets the requirements of digital agencies. Its pioneering features, such as AI suggestions, make it vital for agencies aiming to deliver top-quality SEO results.

- SEO Dashboard: Gives an overview of your SEO performance.

- Site Explorer: Offers in-depth site analysis and insights into broken backlinks.

- Keywords Explorer: Features AI suggestions to enhance keyword research.

- Site Audit: Thoroughly checks site health.

- Rank Tracker: Monitors search ranking progression.

- Competitor Analysis: Provides an in-depth look into how competitors are performing.

- Real-Time Tracking: Surfaces emerging SEO problems and opportunities as they arise.

- Content Explorer, Batch Analysis, Web Explorer: Useful tools for planning and market research.

- Access Management and API: Offers more control and integration capabilities.

Price Plan

Ahrefs has several pricing options for agencies:

- Lite: $99/mo – Great for small businesses and personal projects.

- Standard: $199/mo – Ideal for freelance SEOs and consultants needing a wider range of data.

- Advanced: $399/mo – Suitable for large marketing teams requiring more data and tools.

- Enterprise: $999/mo – Suitable for larger firms needing comprehensive features.

When billed annually, you get two months free, a substantial saving over the monthly subscription.
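As a quick illustration of what "two months free" means in practice, the sketch below assumes annual billing charges 10 of the 12 monthly payments at the listed rates. That reading is an assumption, and the names `MONTHLY_PRICE` and `annual_price` are hypothetical.

```python
# Monthly list prices quoted above (USD).
MONTHLY_PRICE = {"Lite": 99, "Standard": 199, "Advanced": 399, "Enterprise": 999}
FREE_MONTHS = 2  # assumption: annual billing waives two monthly payments


def annual_price(plan: str) -> int:
    """Yearly price when two of the twelve months are free."""
    return MONTHLY_PRICE[plan] * (12 - FREE_MONTHS)


def annual_saving(plan: str) -> int:
    """Amount saved per year compared with paying monthly."""
    return MONTHLY_PRICE[plan] * FREE_MONTHS


if __name__ == "__main__":
    for plan in MONTHLY_PRICE:
        print(plan, annual_price(plan), annual_saving(plan))
```

On this reading, the saving works out to about 16.7% of the month-to-month total (2 of 12 months) on every plan.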

#5. Semrush

Semrush is one of the top choices among SEO tools. Its extensive features and flexible pricing help agencies stay ahead and offer deep insight into SEO, content marketing, and competitor research.

- Competitor Analysis: Understand your competitors’ strategies better.

- Keyword Research: Identify important keywords to ramp up your content and ranking.

- Website Audit: Locate and fix your website’s SEO issues for better performance.

- Backlink Analysis: Check out the quality and number of your backlinks.

- Content Toolkit: Refine your content with dedicated creation, optimization, and distribution tools.

- Historical Data: Go through old data to identify trends and make good decisions.

Price Plan

- Semrush Pro: For $129.95 a month, it provides 5 projects, tracking for 500 keywords, and 10,000 daily reports.

- Semrush Guru: For $249.95 per month, it provides 15 projects, tracking for 1,500 keywords, and extra features such as a content toolkit.

- Semrush Business: For $499.95 per month, it offers extensive project and keyword tracking, including API access.

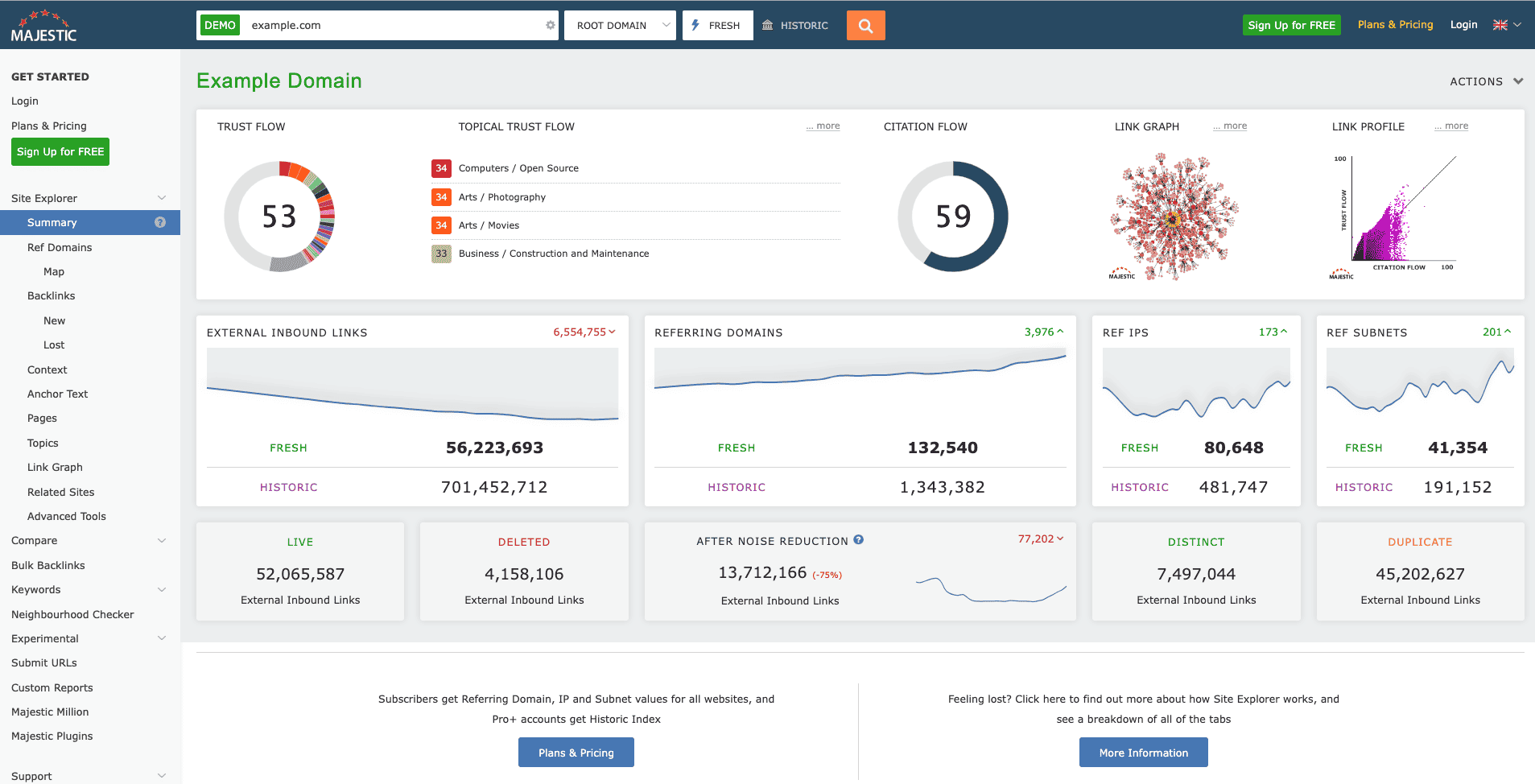

#6. Majestic

Majestic is the standard in link analysis tools, offering unmatched insights into the internet’s structure. Known for its comprehensive backlink data and SEO analytics, Majestic is a top choice for agencies looking to craft excellent link building strategies and perform thorough site audits.

- Fresh Index & Historic Index: Access up-to-date backlink data along with a broad historical archive for thorough analysis.

- Site Explorer: Dive deep into any site’s data to uncover key insights on backlinks, referring domains, and more.

- Trust Flow & Topical Trust Flow: Determine the value of backlinks and their relevance to specific topics to gauge site authority.

- Link Context & Link Graph: Understand each backlink’s context and visualize any site’s link network.

- Clique Hunter & Comparison Tools: Find link opportunities by comparing multiple sites’ backlink profiles.

Price Plan

- Lite Plan: $49.99 per month for access to the Fresh Index and essential tools for one user.

- Pro Plan: $99.99 per month for access to both Fresh and Historic Indexes, a greater number of analysis units, and advanced reporting features for one user.

- API Plan: $399.99 per month for five user seats and extensive API capabilities.

#7. Moz Pro

Moz Pro focuses on enhancing search results and is user-friendly, making it a wise choice for agencies looking to win at SEO. With a focus on both off-page and on-page SEO, Moz Pro offers a comprehensive solution for enhancing your clients’ online presence.

- Keyword Explorer: Offers in-depth keyword research and planning.

- Site Crawl: Examines websites for issues that may harm search engine rankings.

- Rank Tracker: Keeps an eye on keyword rankings across different sites and devices.

- Link Explorer: Analyzes your backlink profile and identifies link-building opportunities.

- Page Optimization: Offers suggestions for improving on-page SEO to strengthen rankings.

Price Plan

- Standard Plan: $99/month for beginners or users who only need basic tools.

- Medium Plan: $179/month for generous limits and unrestricted access to keyword research tools.

- Large Plan: $299/month for businesses and agencies looking to upscale their SEO initiatives.

- Premium Plan: $599/month for large, SEO-conscious businesses and marketing teams requiring extensive features.

Conclusion

Companies reportedly spent about $65 billion on SEO in 2024, so your choice of tools can make or break your project budget. We highly recommend incorporating these online SEO tools into your agency’s day-to-day operations. You might find that they are the missing piece in your site analysis and SEO content optimization strategies.